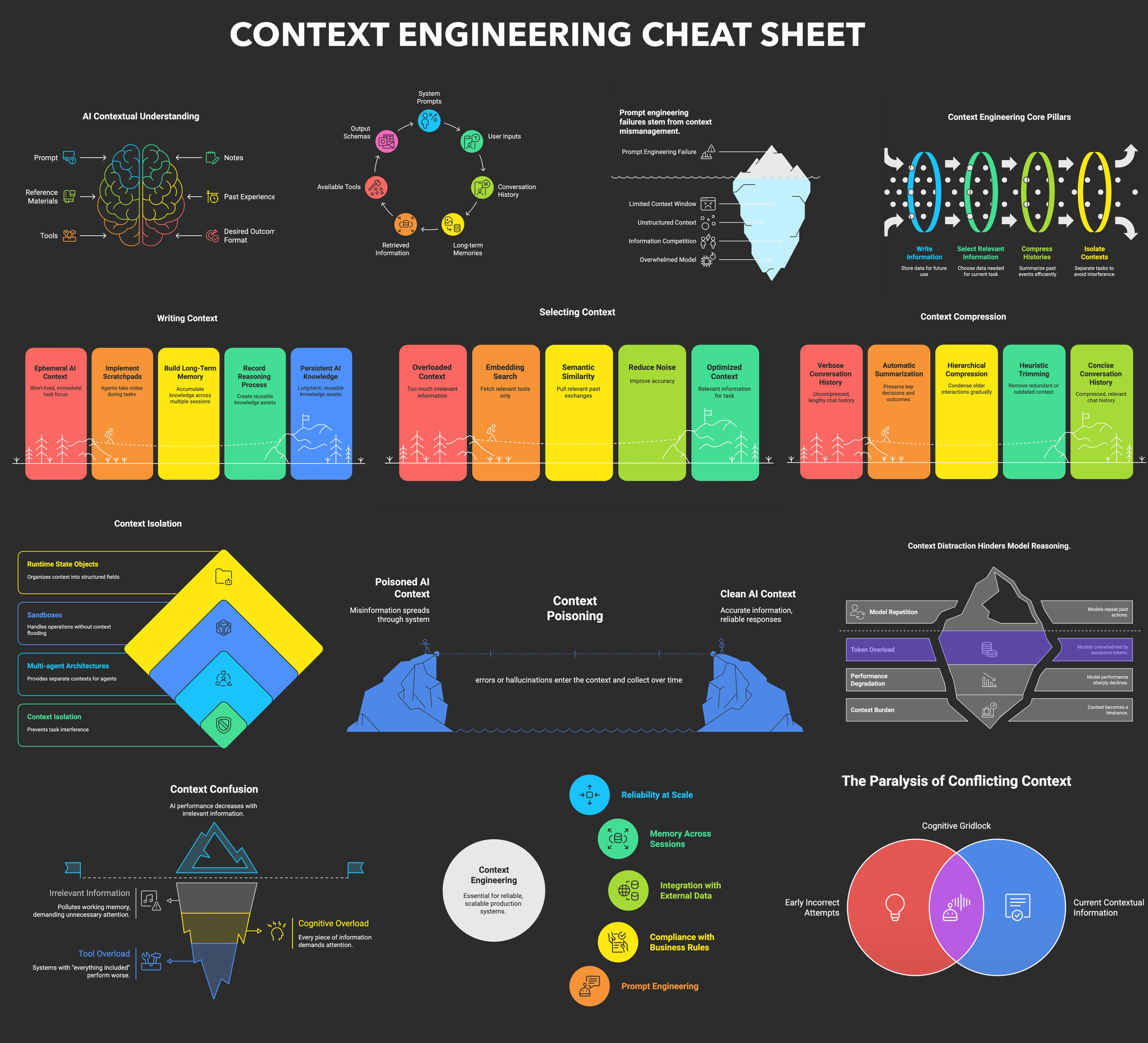

Context engineering is the art and science of managing what information is included in the context window of large language models (LLMs) and AI agents at each step of their operation. As LLMs become more capable and agents more autonomous, effective context engineering is essential for performance, cost, and reliability.

What Context Engineering Actually Is

Context engineering is the systematic design of AI systems that:

- Capture relevant information from every interaction

- Store this information in structured, retrievable formats

- Analyze patterns and relationships across data points

- Apply insights to improve future interactions

- Evolve understanding over time through continuous learning

Context Data Types and Collection Strategies

{
  "customer_id": "unique_identifier",
  "preferences": {
    "communication_style": "direct",
    "preferred_channels": ["email", "sms"],
    "timezone": "EST",
    "language": "en-US",
    "accessibility_needs": []
  },
  "behavioral_patterns": {
    "peak_activity_times": ["9-11am", "2-4pm"],
    "typical_session_length": "15-20 minutes",
    "decision_making_style": "analytical",
    "response_time_expectations": "immediate"
  },
  "relationship_history": {
    "customer_since": "2022-03-15",
    "lifetime_value": 15000,
    "satisfaction_score": 4.7,
    "escalation_triggers": ["billing issues", "technical problems"]
  }
}

Six Specialized Memory Types:

- Core Memory: Essential system context

- Episodic Memory: User-specific events and experiences

- Semantic Memory: Concepts and named entities

- Procedural Memory: Step-by-step instructions

- Resource Memory: Documents and media

- Knowledge Vault: Critical verbatim information

Extending the LLM Context

Long Context Window Extensions

Technical Methods:

- Modified Positional Encoding: Techniques like RoPE (Rotary Position Embedding), ALiBi, and Context-Adaptive Positional Encoding (CAPE) that allow models to handle longer sequences

- Efficient Attention Mechanisms: Methods like Core Context Aware Attention (CCA-Attention), which reduces computational complexity while maintaining performance

- Memory-Efficient Transformers: Approaches like Emma and MemTree that segment documents while maintaining fixed GPU memory usage
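As one concrete instance of a modified positional encoding, here is a minimal sketch of the ALiBi bias. It assumes a power-of-two number of heads, and the function names `alibi_slopes` and `alibi_bias` are illustrative, not taken from any library. Because the bias depends only on the distance between query and key, no learned position table is tied to the training length.

```python
def alibi_slopes(n_heads: int) -> list[float]:
    """Per-head slopes forming a geometric sequence: 2^(-8/n), 2^(-16/n), ..."""
    ratio = 2 ** (-8 / n_heads)
    return [ratio ** (h + 1) for h in range(n_heads)]


def alibi_bias(seq_len: int, slope: float) -> list[list[float]]:
    """Bias added to causal attention scores: -slope * (query_pos - key_pos).

    Future positions (key_pos > query_pos) are masked with -inf.
    """
    return [
        [-slope * (i - j) if j <= i else float("-inf") for j in range(seq_len)]
        for i in range(seq_len)
    ]


slopes = alibi_slopes(8)          # 8 heads: 1/2, 1/4, ..., 1/256
bias = alibi_bias(4, slopes[0])   # 4x4 bias matrix for the first head
print(bias[3])                    # penalty grows with distance from the query
```

Keys that are further from the query receive a larger negative bias, so each head attends more strongly to nearby tokens, with the strength of that preference set by its slope.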

A Survey of Context Engineering for Large Language Models

Components of Context Engineering

- Context Retrieval and Generation

- Context Processing

- Context Management

StreamingLLM

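When a conversation grows longer than the context window, the oldest messages fall outside it and the LLM can no longer attend to them when responding. Simply sliding the window forward also tends to destabilize generation once the very first tokens are evicted. StreamingLLM addresses this stability problem.

StreamingLLM keeps a small, fixed set of initial tokens, called attention sinks (typically only a handful, for example 4), in the KV cache permanently and fills the rest of the cache with a rolling window of the most recent tokens. The window is updated as the conversation moves on, so the model can keep generating fluently over very long streams without the cache growing.

For example, suppose the user says "I am going to London tonight" and the conversation then continues for many turns. Once that message falls out of the rolling window, the model can no longer answer questions about it. StreamingLLM keeps generation stable in this situation but does not bring the evicted message back; retaining such facts requires a separate memory mechanism, such as the memory types described in this article.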
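The cache policy usually attributed to StreamingLLM keeps the first few tokens (the attention sinks) permanently and treats the rest of the cache as a rolling window of recent tokens. Here is a minimal sketch of that policy. It is a toy model that tracks token strings rather than real key/value tensors, and the class name `SinkWindowCache` and its parameters are hypothetical.

```python
from collections import deque


class SinkWindowCache:
    """Toy cache: the first `n_sink` tokens are pinned, the rest is a rolling window."""

    def __init__(self, n_sink: int = 4, window: int = 8) -> None:
        self.n_sink = n_sink
        self.sinks: list[str] = []                      # initial tokens, never evicted
        self.recent: deque[str] = deque(maxlen=window)  # most recent tokens only

    def append(self, token: str) -> None:
        if len(self.sinks) < self.n_sink:
            self.sinks.append(token)
        else:
            self.recent.append(token)  # deque drops its oldest entry when full

    def visible(self) -> list[str]:
        """Tokens the model could still attend to."""
        return self.sinks + list(self.recent)


cache = SinkWindowCache(n_sink=2, window=3)
for tok in ["<s>", "I", "fly", "to", "London", "tonight", "."]:
    cache.append(tok)
print(cache.visible())  # ['<s>', 'I', 'London', 'tonight', '.']
```

The middle tokens ("fly", "to") are gone once the window moves past them, which is why this policy keeps generation stable but is not a long-term memory by itself.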

Best resources to check

LongMemEval benchmark

Benchmarking Chat Assistants on Long-Term Interactive Memory

| Memory Component | Purpose and Function | Key Information Stored |
| --- | --- | --- |
| Core Memory | Stores high-priority, persistent information that must always remain visible to the agent, divided into persona (agent identity) and human (user identity) blocks. It maintains compactness by triggering a controlled rewrite process if capacity exceeds 90%. | User preferences, enduring facts (name, cuisine enjoyment). |
| Episodic Memory | Captures time-stamped events and temporally grounded interactions, functioning as a structured log to track change over time. | Events, experiences, user routines, including event type, summary, details, actor, and timestamp. |
| Semantic Memory | Maintains abstract knowledge and factual information independent of time, serving as a knowledge base for general concepts, entities, and relationships. | Concepts, named entities, relationships (e.g., social graph), organized hierarchically in a tree structure. |
| Procedural Memory | Stores structured, goal-directed processes and actionable knowledge that assists with complex tasks. | How-to guides, operational workflows, step-by-step instructions. |
| Resource Memory | Handles full or partial documents and multi-modal files that the user is actively engaged with (but do not fit other categories). | Documents, transcripts, images, or voice transcripts. |
| Knowledge Vault | A secure repository for verbatim and sensitive information that must be preserved exactly, protected via access control for high-sensitivity entries. | Credentials, addresses, phone numbers, API keys. |
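The six components in the table can be sketched as a single store keyed by memory type, with the 90% core-memory rewrite trigger expressed as a simple check. This is an illustrative sketch only: the names `MemoryType` and `MemoryStore`, the character-based capacity, and the default budget are assumptions, not part of any published implementation.

```python
from dataclasses import dataclass, field
from enum import Enum


class MemoryType(Enum):
    CORE = "core"
    EPISODIC = "episodic"
    SEMANTIC = "semantic"
    PROCEDURAL = "procedural"
    RESOURCE = "resource"
    KNOWLEDGE_VAULT = "knowledge_vault"


@dataclass
class MemoryStore:
    core_capacity_chars: int = 200   # hypothetical budget for core memory
    rewrite_threshold: float = 0.9   # the 90% trigger mentioned in the table
    entries: dict = field(default_factory=lambda: {t: [] for t in MemoryType})

    def core_usage(self) -> float:
        """Fraction of the core-memory budget currently used."""
        used = sum(len(e) for e in self.entries[MemoryType.CORE])
        return used / self.core_capacity_chars

    def add(self, kind: MemoryType, text: str) -> bool:
        """Store an entry; return True if core memory now needs a rewrite."""
        self.entries[kind].append(text)
        return kind is MemoryType.CORE and self.core_usage() > self.rewrite_threshold


store = MemoryStore(core_capacity_chars=40)
first = store.add(MemoryType.CORE, "Name: Sam")
store.add(MemoryType.EPISODIC, "2024-05-01: booked a flight")
second = store.add(MemoryType.CORE, "Enjoys Italian and Japanese food")
print(first, second)  # False True
```

In a real system the rewrite would likely be an LLM call that condenses the core block; here the store only signals that the threshold was crossed.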

Context Compressor

Agentic Context Engineering

Agentic Context Engineering uses three agents:

- Generator: This is the agent's "brain" during execution. It receives the original task (e.g., "Split the cable bill among roommates") and attempts to solve it using the current context, which is the accumulated playbook. It doesn't have the full answer, and it doesn't know every rule. It only has what has been written so far in the playbook: a collection of past bullets. The Generator reads the playbook, applies any relevant insights, generates a reasoning trace, and outputs code or a response.

- Reflector: This is where the learning begins. The Reflector receives the Generator's full trajectory and, if available, the ground truth. It is not a chatbot summarizing what happened; it acts as an expert auditor.

- Curator: The Curator takes the Reflector's diagnostic, together with the current playbook, and decides what to add, not what to rewrite.
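The loop between the three agents can be sketched as follows. This is a toy illustration under stated assumptions: the functions `generator`, `reflector`, and `curator` are hypothetical stubs standing in for LLM calls, and the hard-coded lesson and answers exist only to make the flow visible.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Playbook:
    bullets: list = field(default_factory=list)


def generator(task: str, playbook: Playbook) -> dict:
    # Stub for an LLM call: the answer is only right if a bullet covers the rule.
    knows_rule = any("equal shares" in b for b in playbook.bullets)
    answer = "split equally" if knows_rule else "charge the account holder"
    return {"task": task, "answer": answer}


def reflector(trajectory: dict, ground_truth: Optional[str]) -> list:
    # Audit the trajectory and return candidate lessons, not a summary.
    if ground_truth is not None and trajectory["answer"] != ground_truth:
        return ["Shared bills: use equal shares unless told otherwise."]
    return []


def curator(playbook: Playbook, lessons: list) -> None:
    # Append new bullets only; existing bullets are never rewritten.
    for lesson in lessons:
        if lesson not in playbook.bullets:
            playbook.bullets.append(lesson)


playbook = Playbook()
task = "Split the cable bill among roommates"

first = generator(task, playbook)                      # no relevant bullet yet
curator(playbook, reflector(first, "split equally"))   # learn from the mistake
second = generator(task, playbook)                     # playbook now has the rule

print(first["answer"], "->", second["answer"])
```

The key design choice shown here is that the Curator only appends to the playbook, so earlier lessons are preserved rather than being lost in a full rewrite of the context.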


Resources

- https://github.com/raphaelmansuy/tutorials/blob/main/37-contex-engineering-for-sales-agent.md

- https://boristane.com/blog/context-engineering/

- https://sevant.ai/get-started

- Memory Management in LLMs and Agents

- https://github.com/Meirtz/Awesome-Context-Engineering

- A comprehensive template for getting started with Context Engineering - the discipline of engineering context for AI coding assistants so they have the information necessary to get the job done end to end.

- https://rlancemartin.github.io/2025/06/23/context_engineering/

- Context Engineering - What it is, and techniques to consider

- https://manus.im/blog/Context-Engineering-for-AI-Agents-Lessons-from-Building-Manus

- A Survey of Context Engineering for Large Language Models

- https://factory.ai/news/compressing-context