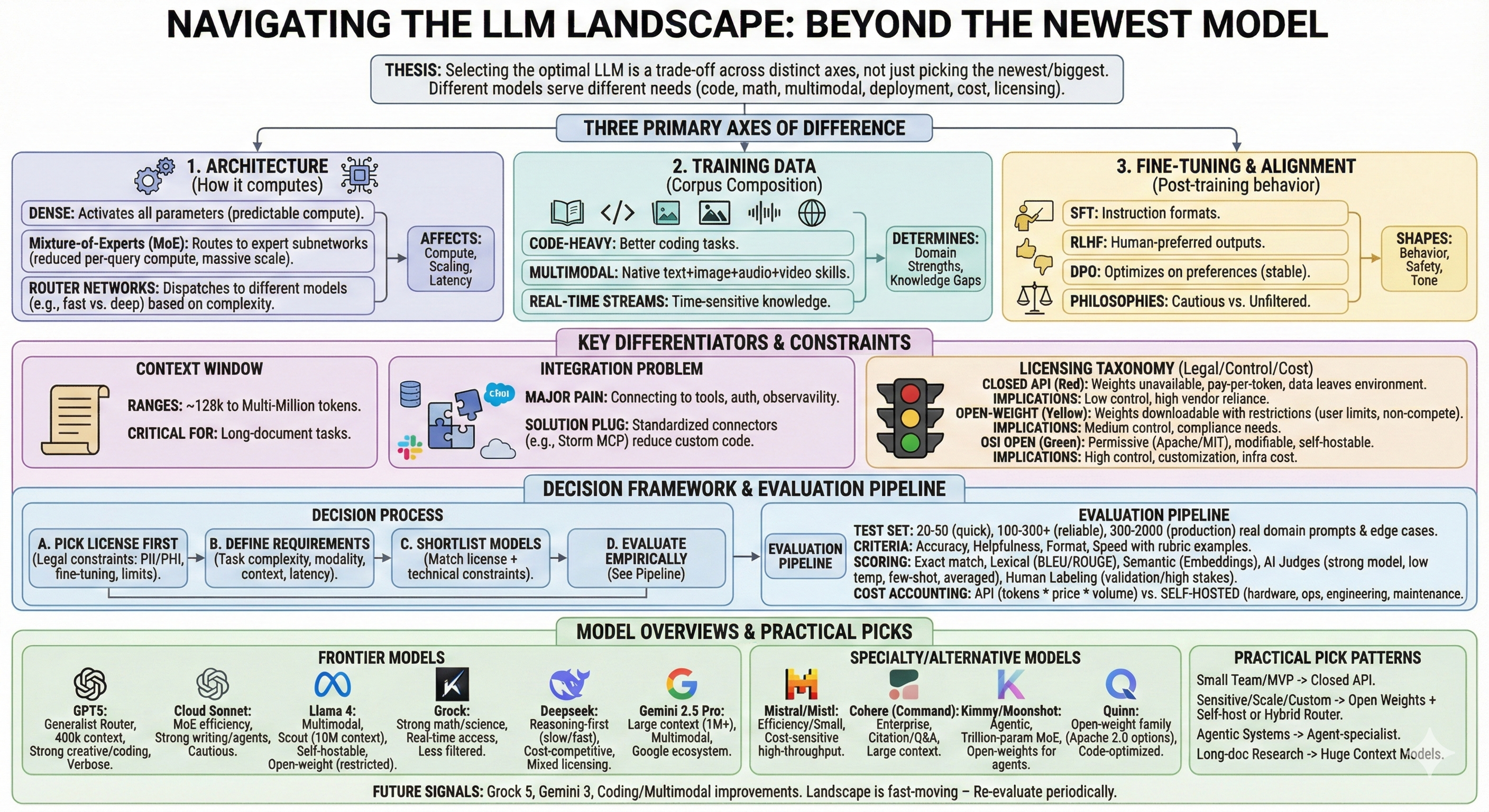

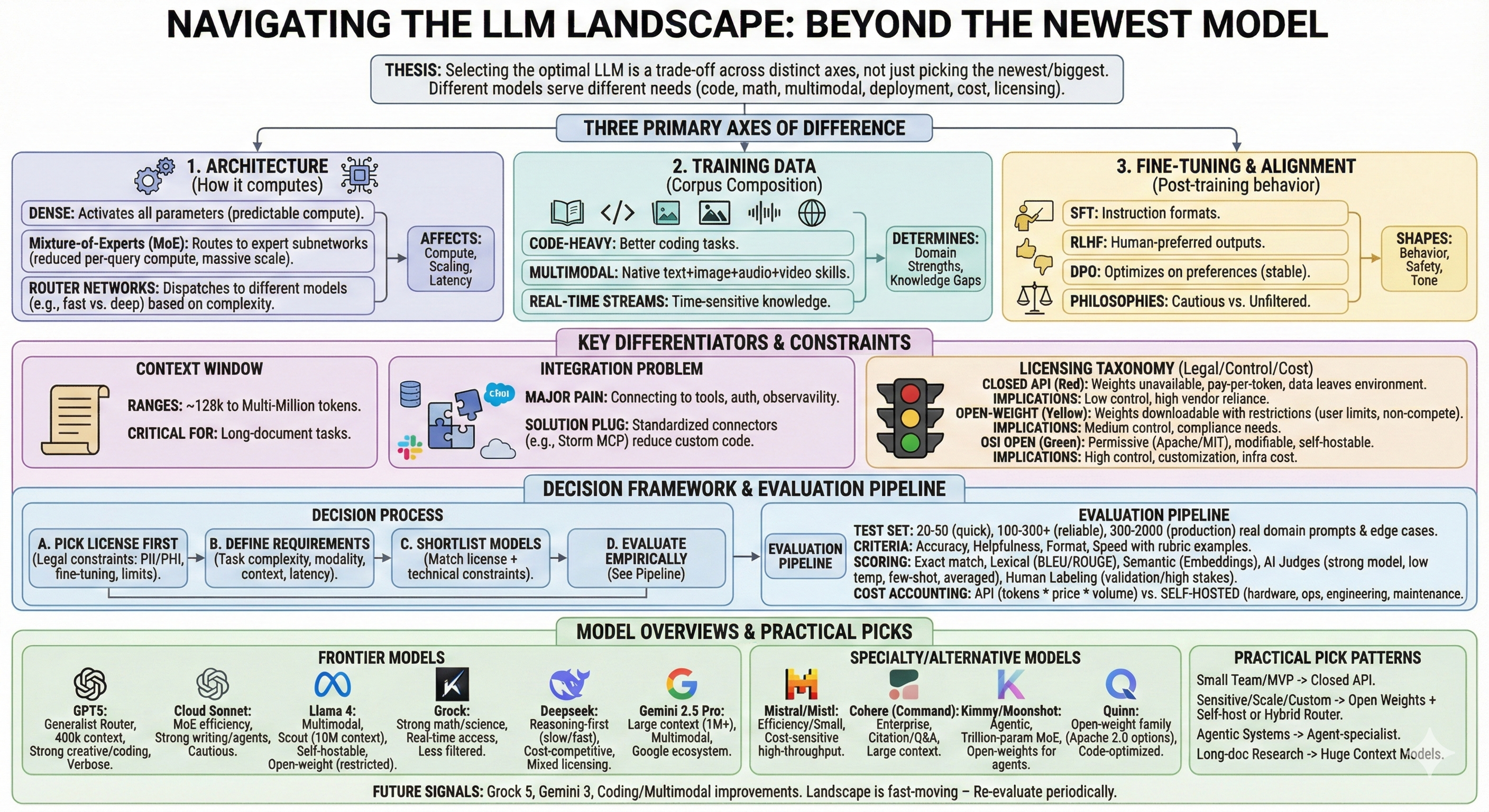

- Thesis / motivation

- Picking the newest/biggest LLM is not always optimal. Different models have distinct tradeoffs (code, math, multimodal, deployability, cost, licensing).

- Three primary axes that make models different

- Architecture — how the model computes (dense vs MoE vs router networks); affects compute per query, scaling, and latency.

- Training data — corpus composition (web, code, images, audio, live streams) which strongly determines domain strengths and knowledge gaps.

- Fine-tuning & alignment — post-training steps that shape behavior: supervised fine-tuning (SFT), RLHF, DPO and vendor alignment philosophies (e.g., constitutional AI, minimal filtering).

- Architecture details called out

- Dense models activate all parameters for every input (predictable compute).

- Mixture-of-Experts (MoE) routes inputs to a subset of expert subnetworks to reduce per-query compute while enabling very large parameter counts.

- Router/ensemble approaches (e.g., GPT5) dispatch queries to different models (fast vs deep) depending on complexity.

- Context window importance

- Context window = how much text the model can consider at once. Examples mentioned range from ~128k tokens to multi-million tokens (affects long-document tasks).

- How training data shapes strengths

- Model A trained heavily on code will perform better on coding tasks. Multimodal training (text+images+audio+video) yields native multimodal skills. Real-time streams give time-sensitive knowledge.

- Fine-tuning & alignment approaches and effects

- SFT teaches desired instruction formats. RLHF biases toward human-preferred outputs. DPO optimizes directly on preferences (more stable). Vendor philosophies (more/less filtering) produce models that are cautious or unfiltered.

- Licensing taxonomy & why it matters (legal/control/cost)

- Closed API: weights unavailable, you pay per token, data leaves your environment unless enterprise agreement.

- Open-weight (custom license): weights downloadable but with restrictions (e.g., user limits, “no competitor” clauses).

- OSI open: permissive licenses (Apache/MIT) allowing modification, self-hosting, commercial use.

- Implications: compliance (PII/PHI), privacy, customization (fine-tune), cost at scale, vendor lock-in.

- Frontier model overviews (key practical notes)

- GPT5 — generalist, router system, large context (400k tokens), strong creative/coding; sometimes verbose.

- Cloud Sonnet (Anthropic) — MoE efficiency, strong at professional writing and agent workflows, cautious alignment.

- Llama 4 (Meta) — multimodal, Scout variant targets enormous context (10M tokens) and self-hosting; open-weight but restricted license.

- Grock (XAI) — strong math/science, real-time X platform access, less filtered.

- Deepseek — reasoning-first setup (slow accurate + fast light); cost competitive; mixes open/open-weight licensing.

- Gemini 2.5 Pro — large context (up to 1M+), multimodal, integrated with Google ecosystem; strong data/analysis use.

- Specialty/alternative models

- Mistral / Mistl — efficiency/smaller models for cost-sensitive high-throughput.

- Cohere (Command) — enterprise features, citation/Q&A focus, large contexts.

- Kimmy / Moonshot — agentic / trillion-parameter MoE with open weights aimed at agents.

- Quinn (Alibaba) — open-weight family with Apache 2.0 options, code-optimized variants.

- Decision framework (practical process)

- Step A — pick license first. Legal constraints (PII, PHI, fine-tuning needs, user limits) should eliminate options early.

- Step B — define technical requirements. Task complexity, modality, context size, latency, deployment constraints.

- Step C — shortlist models that meet license + technical constraints.

- Step D — evaluate empirically. See next step.

- Evaluation pipeline (detailed procedure)

- Test set: 20–50 minimum prompts for quick checks; aim for 100–300+ (or 300–2000 for production-grade reliability) using real domain data and edge cases.

- Criteria/rubric: define accuracy, helpfulness, format compliance, speed. Create concrete rubric examples for each score level.

- Scoring methods: exact match for rigid tasks; lexical metrics (BLEU/ROUGE) when references exist; semantic similarity (embeddings + cosine) for flexible answers.

- AI judges: use a strong model to evaluate outputs (works without references). Use low temperature and few-shot examples to improve consistency; average multiple runs to reduce variance.

- Human labeling: necessary for final validation or when stakes are high.

- Cost accounting

- API cost = input_tokens * input_price + output_tokens * output_price × monthly_volume.

- Self-hosted cost = GPU/hardware amortization, infra ops, engineering time, monitoring, and maintenance. Evaluate break-even vs expected volume.

- Practical pick patterns

- Small teams / fast MVP → closed API (GPT5/Claude/Gemini).

- Sensitive data / heavy customization / scale → open weights + self-host (Mistral, Kimmy, Llama variants) or hybrid router (mix of open + closed).

- Agentic/autonomous systems → agent-specialist models (Kimmy, Cloud Sonnet).

- Long-document research or analysis → models with huge context (Llama Scout, Gemini, Grock).

- Future signals (what’s coming)

- Grock 5, Gemini 3, further coding and multimodal improvements; landscape remains fast-moving — re-evaluate periodically.